Facebook launches investigation into moderation of disturbing content

An undercover investigation revealed moderators at Cpl Resources were instructed not to remove extreme, abusive or graphic content from the platform.

Facebook has launched an investigation into a contractor after it instructed its moderators not to remove extreme, abusive or graphic content from the platform.

Company executives said they would review and change the company’s processes and policies to make the social media site safer and more secure.

Niamh Sweeney, Facebook Ireland head of public policy, told a parliamentary committee that suggestions the tech giant turns a blind eye to disturbing content were “categorically untrue”.

It came as Facebook apologised for allowing disturbing content to remain on its site.

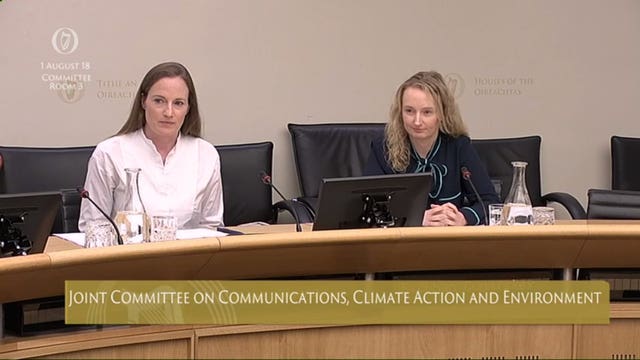

Ms Sweeney and Siobhan Cummiskey, head of content policy for Europe, the Middle East and Africa, appeared before the Irish Communications Committee to answer questions about Facebook’s policy on moderating violent and harmful content.

Inside Facebook, a documentary in Channel 4’s Dispatches series in Britain, used hidden-camera footage to show how content moderation practices are taught and applied within the company’s operations in Dublin.

An undercover reporter worked at Cpl Resources in Dublin, Facebook’s largest centre for Ireland and UK content.

It emerged in the investigation that Facebook moderators were instructed not to remove extreme, abusive or graphic content from the platform even when it violated the company’s guidelines.

This included violent videos involving assaults on children, racially charged hate speech and images of self-harm among underage users.

Ms Sweeney told the committee she and her colleagues were “upset” by what was reported in the programme.

“If our services are not safe, people won’t share content with each other, and, over time, would stop using them,” she said.

“Nor do advertisers want their brands associated with disturbing or problematic content, and advertising is Facebook’s main source of revenue.”

For six weeks the undercover reporter attended training sessions and filmed conversations in the offices.

One video showed a man punching or stamping on a screaming toddler.

The moderators marked it as disturbing, allowed it to remain online and used it as an example of acceptable content.

Ms Sweeney admitted that allowing disturbing content, including violent assaults and racially charged hate speech, to remain on the platform was a betrayal of Facebook’s own standards.

She said the social media giant was not aware that a video of a toddler being assaulted by an adult was being used as an example of the type of content that was allowed to remain on its site.

“We understand that what I am saying to you has been undermined by the comments captured on camera by the Dispatches reporter,” Ms Sweeney said.

“We are in the process of an internal investigation to understand why some actions taken by Cpl were not reflective of our policies and the underlying values on which they are based.”

She told the committee that Facebook is investing heavily in new technology to help deal with disturbing content.

Ms Sweeney also said the guidance given by trainers to its moderators was incorrect.

The committee heard that while the decision not to remove the video of the three-year-old was a mistake, there is a “narrow set of circumstances” in which Facebook would allow the video to be shared.

She explained this would happen if the child was still at risk and there was a chance the child and perpetrator could be identified to law enforcement.

However, the chair of the committee hearing, Hildegarde Naughton TD, said it was not acceptable that Facebook would be the “sole arbitraries” in relation to what can and cannot remain online.

She said: “To say they will leave harmful, abusive and illegal material online to find the perpetrator is not acceptable and not acceptable to the people who see it online.

“It’s up to the law enforcement agencies and up to Facebook to contact the relevant child protection agency, all of those procedures need to be set up.”

After the programme was aired, Facebook said it had made changes to its processes and policies.

These include flagging users who are suspected of being under the age of 13 and putting their accounts on hold. Its policy on removing child abuse videos is also under review.

Ms Sweeney went on to say Facebook is carrying out an internal investigation with Cpl to establish how the “gaps between our policies and values” and the training at Cpl arose.

The staff at the centre of the Dispatches show were also “encouraged” to take time off.

Other steps taken by the tech giant include retraining Cpl trainers, revising their training materials, introducing new quality control measures and holding an audit to identify any repeat failings by Cpl over the last six months.

Ms Sweeney added that while a number of issues were raised in the Channel 4 programme, Facebook’s system was “working”.

She continued: “I wouldn’t like to say that the system is broken entirely. We have had some amount of success in regulating areas.”

She said the company had been most effective in tackling child sexual exploitation.

“The vast majority of people on Facebook don’t use it in the way we are listening to today,” she added.

“They don’t encounter the type of people we are discussing.”