Molly Russell entered ‘dark rabbit hole of suicidal content’ online, says father

Instagram said it has removed double the amount of material relating to self-harm and suicide since the start of this year.

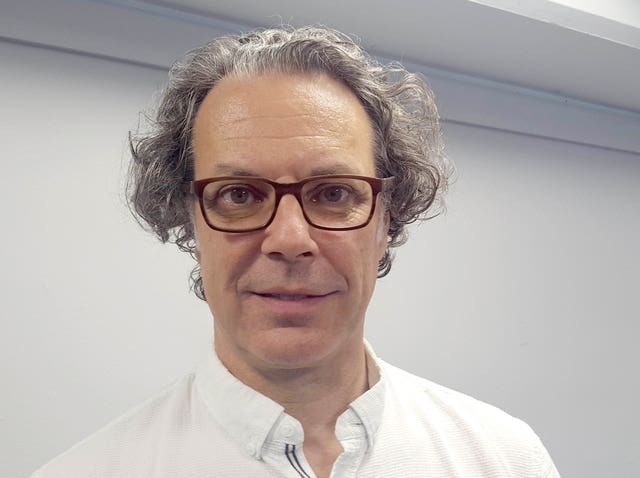

A 14-year-old British girl who took her own life after viewing harmful images on Instagram had entered a “dark rabbit hole of depressive suicidal content”, her father said.

Molly Russell killed herself in 2017 after viewing graphic content on the Facebook-owned platform.

Her father, Ian, said he believed Instagram was partly responsible for her death.

Speaking to BBC News, Mr Russell said: “I think Molly probably found herself becoming depressed.

“She was always very self-sufficient and liked to find her own answers. I think she looked towards the internet to give her support and help.

“She may well have received support and help, but what she also found was a dark, bleak world of content that accelerated her towards more such content.”

Mr Russell said the algorithms used by some online platforms “push similar content towards you” based on what you have previously looked at.

He said: “I think Molly entered that dark rabbit hole of depressive suicidal content.

“Some were as simple as little cartoons – a black and white pencil drawing of a girl that said ‘Who would love a suicidal girl?’.

“Some were much more graphic and shocking.”

Mr Russell said he was “really pleased” that Instagram was taking a positive step forward in removing harmful posts.

Instagram said it had removed double the amount of material related to self-harm and suicide since the start of this year.

Between April and June 2019, it said it removed 834,000 pieces of content, 77% of which had not been reported by users.

But Mr Russell added: “It would be great if they could find a way to take down 10 times the number of posts and really reduce the potentially harmful content that is on their platform.”

Instagram boss Adam Mosseri said: “Nothing is more important to me than the safety of the people who use Instagram.

“We aim to strike the difficult balance between allowing people to share their mental health experiences – which can be important for recovery – while also protecting others from being exposed to potentially harmful content.”

Mr Mosseri said Instagram had expanded its policy to ban fictional depictions of self-harm or suicide, including memes and illustrations, as well as content showing methods or materials.

Mr Russell urged parents to speak with their children about what they are viewing online and how they are accessing it.

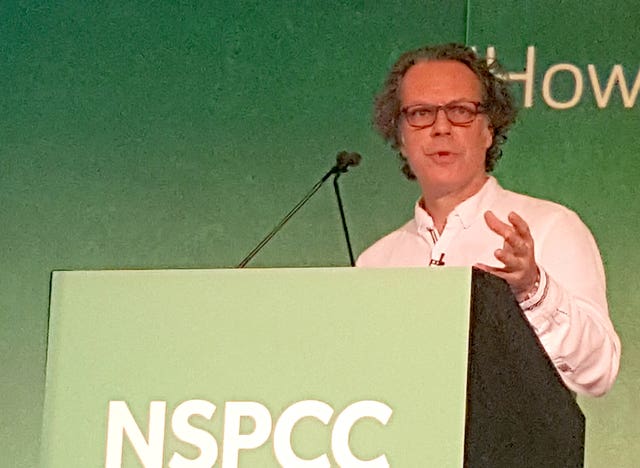

Andy Burrows, NSPCC head of child safety online policy, said that, while Instagram had taken “positive” steps, the rest of the tech industry had been “slow to respond”.

“That is why the Government needs to introduce a draft Bill to introduce the Duty of Care regulator by next Easter and commit to ensuring it tackles all the most serious online threats to children,” he said.